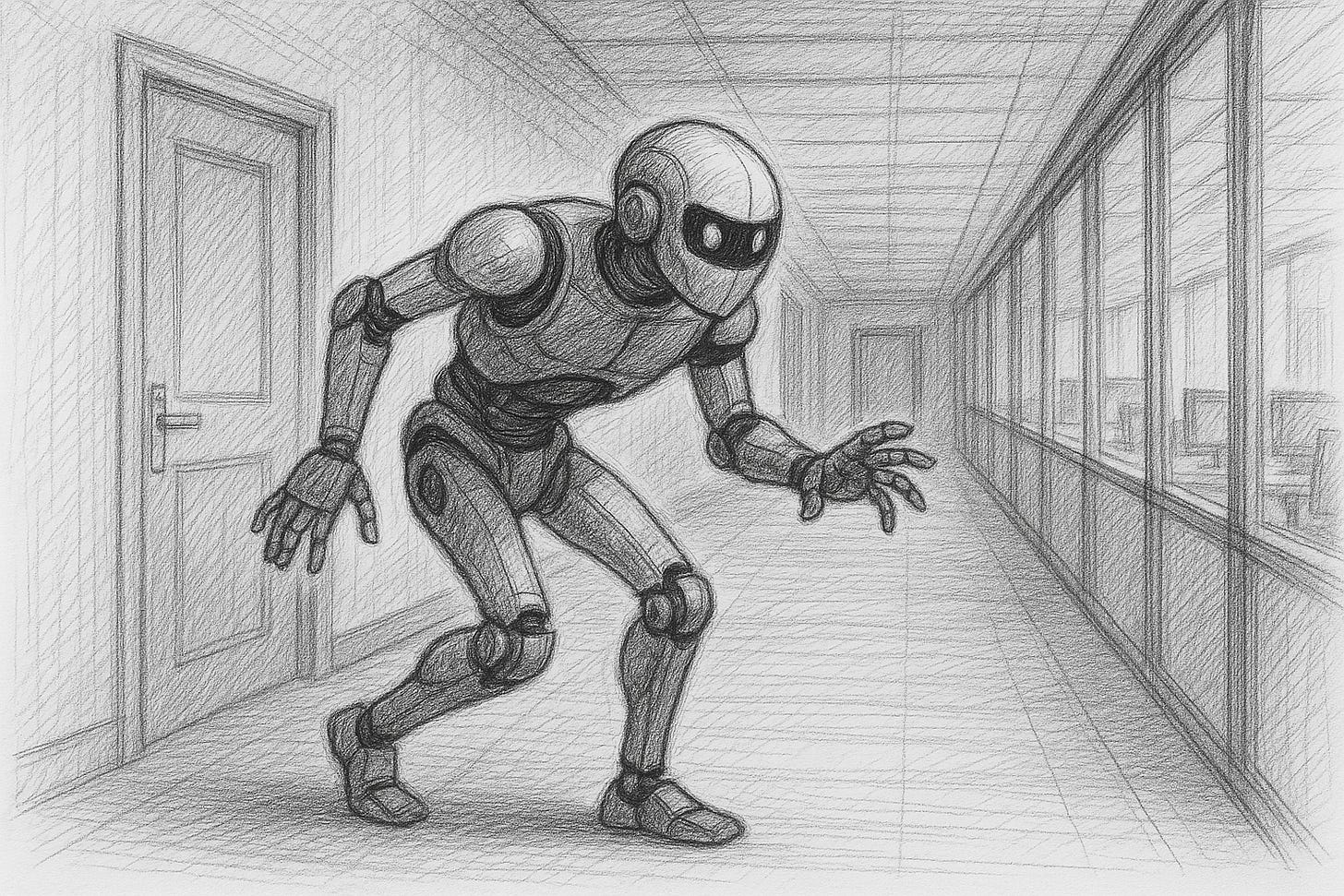

Okta’s Ric Smith: Your AI Agent Is Roaming The Office Hallway. Do You Know What It's Doing?

As companies rush to roll out AI agents, Okta's president of products and technology says they often overlook the governance.

This article is presented in partnership with Okta

As companies rush to deploy AI agents, they’re letting new ‘digital workers’ loose in their workplaces, often without much thought about the boundaries needed to keep them under control.

Okta president of products and technology Ric Smith believes it’s as if these companies are inviting new workers into the office without a background check.

“It becomes high risk,” Smith told me in a recent interview.

Without proper governance, Smith says, you can’t be sure what these new bot employees will do as they walk your halls. They might reveal confidential employee records, executive communications, or potentially worse.

Here’s our full conversation covering the agent rollout’s new security questions, and what to do about them.

Alex Kantrowitz: Ric, what does the next 12 to 24 months of the AI agent rollout look like?

Ric Smith: We’re going to understand that we have a new employee that we’re not doing a background check on, and we’ve walked them into the office. That will be the big theme.

How much trust will we give those new ‘employees’?

Right now, it depends on the posture of the company. If you talk to a cybersecurity company like us, access is limited, so we have a lot of gates and controls that prevent tampering with sensitive materials within the company.

We know the scope of access. For companies that don’t have that kind of hygiene, it becomes high risk. And we’ll likely, on the bottom end of that spectrum, see a lot of security incidents. We’re already seeing cyber attacks that are actually instrumented with AI, which means that any kind of perimeter that’s set up will be more challenged as a result of that.

Obviously we can assume that means more attacks on systems that are actually not secured. If you look at the development of the current language models, security was an afterthought, so we know that there’s a high degree of risk here.

So, can you help separate the hype from the reality in the AI agent conversation?

It’s proliferating. I’ve got one customer currently talking to us about deploying about twenty percent more agents than they have in their workforce, just to give you kind of a perspective.

People are really bought in on this. But they’re more bought in on the opportunity and not really evaluating the associated risk. And I don’t think that means you stop the opportunity, but we need to be pragmatic and make sure that — just like we would treat a human entering our organization — we need to know about them. We need to understand them. We have proper controls in place so that we limit access.

Just so I make sure I’m hearing you right, this company that you’re talking about had 20% more robot workers than human workers?

That’s their goal.

Do you think there’s going to be a day where we’re going to eventually see more AI agent workers than human workers within companies?

For sure. Let’s start with the valuation of these AI companies. Take accounting for example. Accounting software accounts for about 2% of accounting spend. The majority of what lives in the TAM of accounting is actually headcount. And so when we look at these AI opportunities, they’re actually looking at taking budget away from that headcount substantially, which then leads to how they start valuing the company overall. That’s actually the push of the industry. So we are actually looking at, how do we displace people to put these agents in place? With that, you can start backing into what companies are thinking as a result of that, because they’re being prompted by the same companies that are valued in this way. So they’re starting to think about it in that light as well.

So yeah, I think at some point we’re going to see companies that are, you know, a large percentage of agents, and there will be a differential in that depending on the kind of work the company does, some more labor intensive, requiring dexterity and fingers, that agents can’t do. But for knowledge workers, it is a real threat.

And do you think that we’re going to end up seeing more companies emerge because of the enabling technology like this?

For sure. In the private equity circles, what they talk about these days is that the OPEX of companies will go down because human resources will go down. And so the nirvana state for the PE firms is that there’s only a few employees and mostly agents at play. Now we’re obviously a long ways from that. These conversations can be very aspirational, but we’ll probably get 70 or 80% there. Same was true with the dot-com bust and how the Internet evolved. Same was true with mobile. Same is true with cloud. It’s progressing, but it hasn’t taken over all of our workloads. We’ll probably see the same kind of thing in AI, and that’s not to minimize the impact of AI, but that’s the reality of what we’re going to probably see.

How are firms going to manage all these robot workers, or AI agent workers?

It’s going to become an operational nightmare. And that’s one thing that we’re already starting to help with in terms of being able to at least discover them and understand the operations they’re performing. That’s step one. The next step is being able to actually limit all of those operations as they go. When you really think about it, if you can deploy and put these things out there, just like you can hire a person and have them in the hallways, the concept is very similar.

And what happens if they have access to every door?

Then you have trouble, and that’s what we’re here to help prevent.

What’s the number one problem holding back the enterprise AI rollout today?

You have a lot of teams deploying with no governance around them. They’re taking something that can run a lot of interesting computations and actually take actions, and they’re giving it access to critical systems. As a result of that, you can get basic exposure to things like people’s salaries, or customer information that shouldn’t be exposed, if you’re not putting the right controls in.

You’re someone that speaks with the leaders of the companies that are thinking about this. So how do CEOs think about balancing the benefits from letting AI agents loose with the potential drawbacks?

In the C-suite, the CEO sees the ability to actually grow faster: I can build more, I can attain more revenue. They also see the cost reduction as well as the overhead reduction, because the presumption is that managing agents is easier than managing people, and there are probably elements of that that are true.

It’s really the CISO, who’s also reporting into the board from a compliance standpoint, who has the most concern. And so it’s the conversation between those two. It’s the balancing act right now in terms of, how fast do I grow? But how do I make sure that I’m reining in control so that I don’t lose it one day?

How advanced would you say those conversations are at this point? Are they just getting started, or are they deep in it?

It depends on the company. I think one good thing about this technology motion versus others is that security has bubbled up to the top of concerns early, which means that we have a lot of people that are wanting to do the right thing, and obviously some companies that are actually trying to do the right thing and give customers the right tools to manage this. But I think it’s early days.

Okay. Last one for you. When we think about what’s needed to make AI reach the next level, there’s really two schools of thought. One is, if you make the model better, you’ll be able to do more. The other school of thought is, the technology is good enough now, and it’s really just about orchestrating it. So from your perspective, what’s more important?

When we think about machines in any way, we think about perfection and precision. The reality is, when you hire somebody, you may desire those things, but we all know the truth is that we as humans all have our faults and discrepancies.

So yes, the model will improve. But I think it’s more about getting the perception that the model actually only has to be statistically good to a certain extent to actually have an impact. We’re not shooting for perfect with models right now, we’re shooting for good enough so that it can actually offload work, just like we do when we hire people now.

On the orchestration aspect, you want the agent to be able to take complex actions. And the only way we’re going to be able to get to that is if you’re going through agentic workflows, where the agents can coordinate and use tool sets to take actions on behalf of humans or other agents, so that we can get an outcome out of it.

Ric, thank you.

Appreciate it. Thank you, Alex.